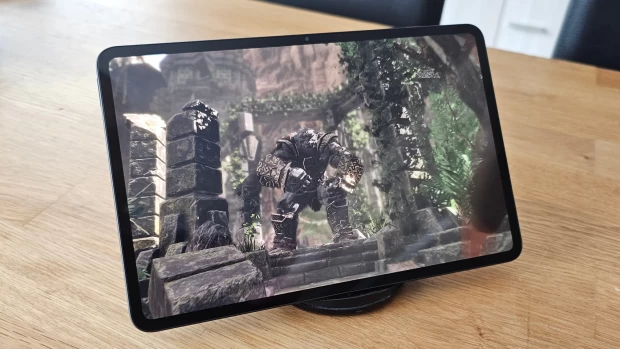

Could Microsoft's new dev team allow games like Halo: Spartan Assault to work on non-Microsoft platforms?

Microsoft has been trying for some time to convince game developers to build their titles for the company's platforms. The results of those efforts have been mixed so far, but a new listing posted this week on the Microsoft Careers website suggests that the company is serious about bringing game creators over to its side.

How will it do this? According to the Careers listing for a Software Development Engineer, Microsoft is forming a team called "New Devices and Gaming" that aims to build a way to create games for multiple platforms, including those not controlled by Microsoft.

The goal of Microsoft's new team is pretty straightforward: it wants to "win back our game developers from our competitors." The listing adds, "We will create a modern framework that is open source, light-weight, extensible and scalable across various platforms including Windows Store, Windows Phone, iOS and Android."

Yes, Microsoft says the game creation framework it wants to build will be open source. That in itself is nothing new for the company; it has supported open source software in the past and has released certain products to that community, such as the recent NewsPad blogging tool. However, this new effort seems to be a step up from those previous projects.

Exactly how this will work is still a mystery, but Microsoft might reveal more information as part of its activities at the 2014 Game Developers Conference, to be held in San Francisco later this month.

Source: Microsoft Careers via WinBeta | Image via Microsoft
