In our previous article, we covered the performance impact that anti-malware products such as Microsoft Defender, McAfee, and Norton, among others, can have on systems, and how they rank against one another. In this one, we look at the protection they offer, since a mild to moderate hit to system responsiveness is hard to excuse if a security app can't defend against threats.

AV-Comparatives and AV-TEST have released their latest reports, with testing carried out on Windows 10 and Windows 11 respectively. AV-Comparatives published two separate, very comprehensive reports: the Real-World Protection Test and the Malware Protection Test.

AV-Comparatives explains how the Real-World Protection Test works:

Our Real-World Protection Test aims to simulate real-world conditions as experienced every day by users. If user interactions are shown, we choose “Allow” or equivalent. If the product protects the system anyway, we count the malware as blocked, even though we allow the program to run when the user is asked to make a decision. If the system is compromised, we count it as user-dependent.

And here is how it describes the Malware Protection Test:

The Malware Protection Test assesses a security program’s ability to protect a system against infection by malicious files before, during or after execution.

The methodology used for each product tested is as follows. Prior to execution, all the test samples are subjected to on-access and on-demand scans by the security program, with each of these being done both offline and online.

Any samples that have not been detected by any of these scans are then executed on the test system, with Internet/cloud access available, to allow e.g. behavioural detection features to come into play. If a product does not prevent or reverse all the changes made by a particular malware sample within a given time period, that test case is considered to be a miss. If the user is asked to decide whether a malware sample should be allowed to run, and in the case of the worst user decision system changes are observed, the test case is rated as “user-dependent”.

The Real-World Protection Test consists of 512 test cases, whereas the Malware Protection Test uses over 10,000 (10,007, to be precise). The images below are from the latter.

Microsoft Defender continues to be mediocre in the offline category. This has been noted in previous assessments as well, though Defender more than makes up for it with its online detection and protection rates. Aside from Defender, McAfee was also very unimpressive in the offline detection test, and Kaspersky was only barely ahead.

Defender produced five false alarms, which places it around the middle of the ranking. F-Secure produced the most false alarms. Among the more popular products, McAfee and Norton were among the worst, with 10 and 12 false alarms respectively.

Moving over to AV-TEST, most of the tested products performed similarly to one another. However, there is one major outlier.

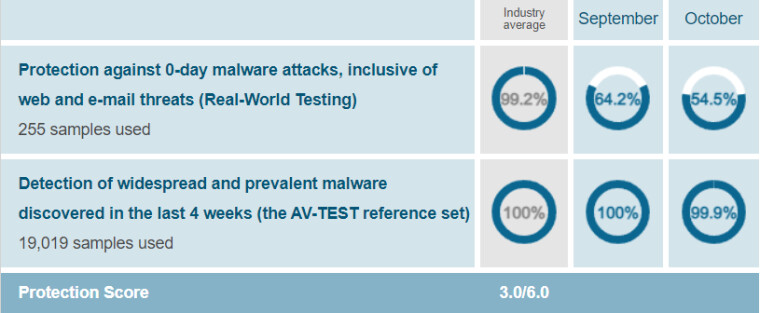

Bkav, a Vietnam-based antivirus product, is a fairly new participant in AV-TEST's evaluations, and it clearly has not fared well. Bkav Internet Security AI received 3.0 out of 6.0 points in the Protection category and 3.5 out of 6.0 in Usability, making it one of the worst performers of all time, as AV-TEST very rarely awards scores below 4.5.

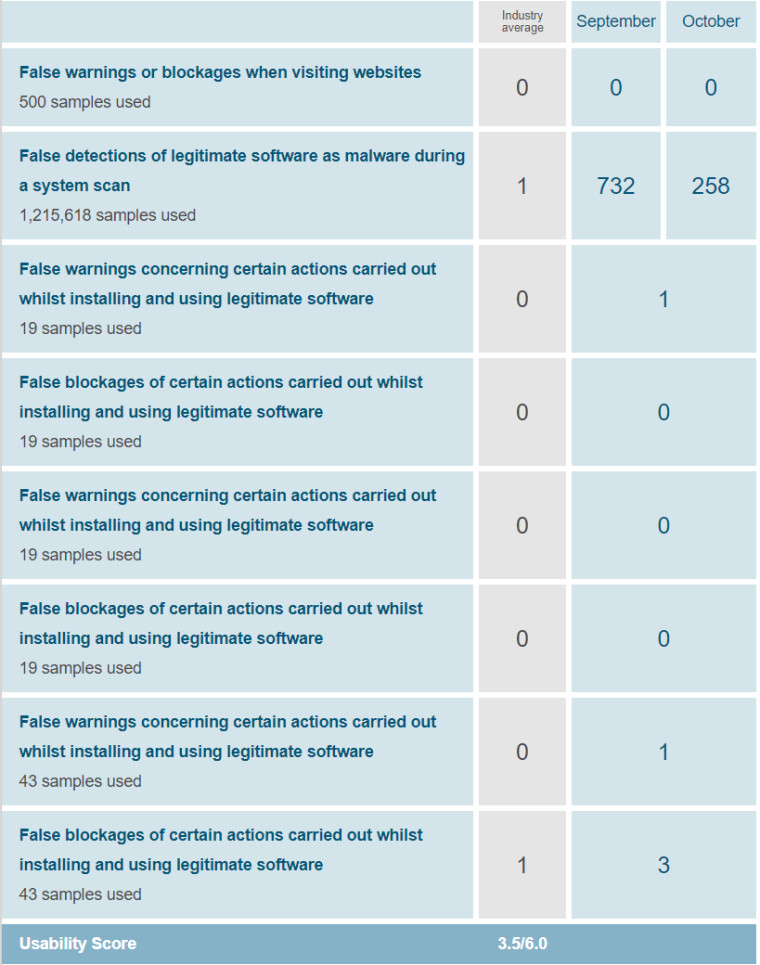

In case you are wondering, the Usability score is meant to gauge the inconveniences and annoyances caused by an antivirus product. Hence, things like false alarms are counted in this category.

A look at the breakdown of Bkav's scores makes it easy to understand why. Bkav clearly had a very hard time dealing with zero-day malware, scoring just 54.5%, little more than half the industry average of 99.2%. What's worse, this month's score is lower than September's.

Meanwhile, in the Usability category, AV-TEST recorded 258 false positive detections, compared to an industry average of just 1. Bear in mind, though, that this is much better than the previous month's 732, so the developers seem to be on the right track.