Last month, Microsoft launched an experimental AI chatbot on Twitter called Tay, allowing users to interact with it and discuss any topic of their choosing. The deployment was intended to boost Tay's machine learning capabilities - "the more you talk the smarter Tay gets", Microsoft said - but the experiment went badly wrong as Tay quickly turned into a racist, sexist, homophobic lunatic, spouting all sorts of appalling and shocking sentiments.

Microsoft apologized for the "unintended offensive and hurtful tweets" from Tay, although it placed the blame on a "coordinated attack by a subset of people". The heart of the problem, clearly, was that Tay - lacking any sense of moral or social propriety - simply responded to whatever topics were presented to it.

So it's perhaps not surprising that Microsoft has apparently implemented some precautions with its latest AI experiment, CaptionBot. The idea behind it is simple: you upload a picture, and the bot attempts to describe what's in the image.

However, there are some limitations, the most notable of which is that CaptionBot won't go near any images in which it recognizes Adolf Hitler's face. If it identifies Hitler in an image, the bot will simply spit out an error message: "I'm not feeling the best right now. Try again soon?"

The same error message will appear no matter how many times you try again, which suggests that Microsoft has prevented CaptionBot from attempting to describe the contents of those images. Lower-resolution images in which Hitler is not so easily identifiable by the bot might still get through, though.
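Microsoft hasn't said how the block works, but the observed behavior matches a simple guard placed in front of the captioning step. What follows is only a rough sketch of that idea, not CaptionBot's actual code: the blocklist, the `caption_with_guard` function, and the `recognized_faces` and `describe` parameters are all hypothetical names invented for illustration.

```python
# Hypothetical sketch: none of these names come from Microsoft's code.
FALLBACK_MESSAGE = "I'm not feeling the best right now. Try again soon?"

# Assumed blocklist of identities the bot refuses to caption.
BLOCKED_IDENTITIES = {"adolf hitler"}


def caption_with_guard(recognized_faces, describe):
    """Return a caption, or the fixed fallback if a blocked face was recognized.

    recognized_faces: names produced by some face-recognition step (assumed).
    describe: a zero-argument callable that produces the caption (assumed).
    """
    normalized = {name.strip().lower() for name in recognized_faces}
    if normalized & BLOCKED_IDENTITIES:
        # Deterministic refusal: retrying the same image gives the same message.
        return FALLBACK_MESSAGE
    return describe()
```

Under these assumptions, a guard like this would explain both observations: the same image always produces the same message, and a low-resolution image in which no face is recognized never triggers the block, so it is captioned as usual.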

This isn't entirely surprising, as Business Insider notes, given that some of the most offensive, and widely circulated, tweets that Tay spat out were anti-Semitic - including Holocaust denial - or otherwise favorable towards Hitler.

CaptionBot was developed by the team behind Microsoft's Cognitive Services framework, which the company proudly showed off at its Build 2016 developer conference last month. One of the most impressive, and inspiring, projects built using some of the 22 new intelligence APIs that are part of that framework was Seeing AI, a remarkable app that can help blind people to 'see' the world around them.

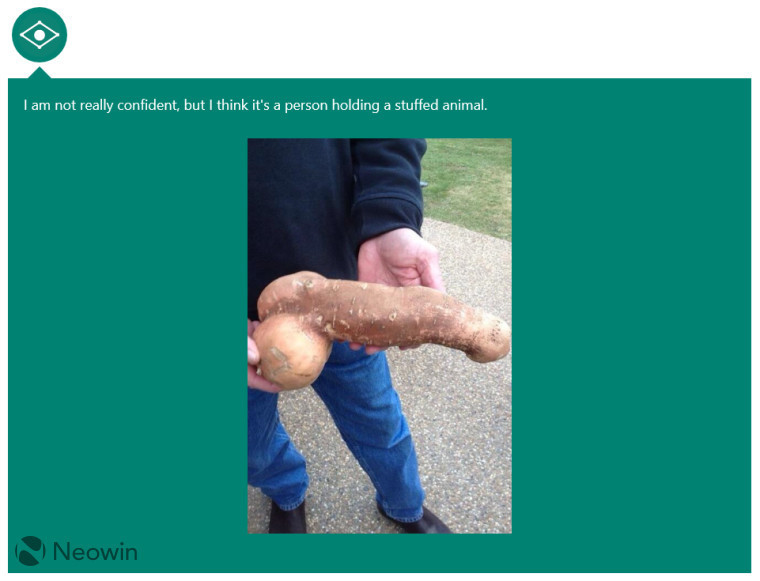

Like Seeing AI, CaptionBot attempts to provide a 'plain English' description of what it sees in any image that's presented to it, and for the most part, it does a pretty decent job of interpreting what's happening in the pics that it's shown.

There's certainly room for improvement though - and indeed, that's the whole point of this latest experiment. Users can rate the quality of each caption to help improve the bot's machine learning capabilities - and the more images that are uploaded and rated, the better its descriptions should become.

As things currently stand, CaptionBot can't yet identify one of Microsoft's other products - its HoloLens augmented reality headset.

It also can't identify someone being assimilated by a Borg drone - no, CaptionBot, that's not a motorcycle.

And CaptionBot also struggles with unusually-shaped vegetables.

You can find out more about Microsoft's efforts in a blog post published today: 'Teaching computers to describe images as people would'.

Hit the source link below to take CaptionBot for a spin - and don't forget to provide a rating below the image once the caption is generated.

Source: CaptionBot
