Google Lens was originally announced at last year's Google I/O, introducing AI-powered image recognition that lets users take certain actions on scanned images, such as automatically enhancing them based on the subjects the software recognizes. This year's Google I/O is now underway, and Google is announcing a set of improvements to the service just in time for the anniversary of its announcement.

For starters, the company announced that Google Lens, which so far has been available in Google Photos and Google Assistant, will be coming directly into the camera app. Considering that manufacturers tend to have their own custom camera app on their devices, one might think that this feature would be exclusive to Pixel phones, but it's actually coming to a number of partner OEMs, including LG's upcoming G7 ThinQ and phones from other partners such as Sony, Xiaomi, and Nokia. The company said that the feature would start hitting devices next week, which is presumably when the Pixel phones will receive it, while other phones might be waiting a little longer.

In addition to its new home, Google Lens is also getting more capable, with three major new features announced today. First off, Lens will now be able to recognize text in pictures and make it available for selection, just as users can select any piece of text in an email or on a website. This feature is called Smart Text Selection, and it should make it much easier to turn physical text into digital text.

Style Match is another new feature for Google Lens, meant to help users who want to find something that's not exactly the same as what they're seeing, but has a similar style. Google demonstrated this with a lamp as well as an outfit, and in the latter case, it was even possible to point at a specific piece of clothing or accessory to look up similar items.

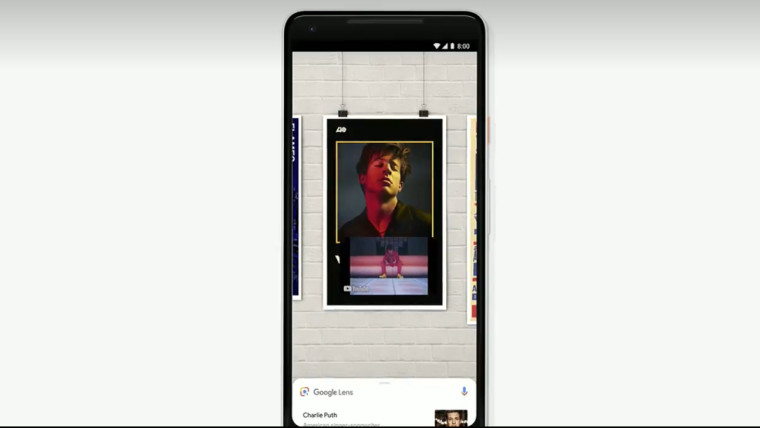

Finally, Google Lens will be able to provide real-time results in the camera app, using machine learning and cloud TPUs to identify the objects in the frame, similar to how the lamp is identified and tracked in the picture above. Over time, Google aims to overlay actual results on top of the things the user sees through the camera. For instance, users could point at an album poster on the street and get a live preview of the most relevant music video from the album.

This particular feature should take some time to make it to users, but the others should be available in the near future as Google Lens spreads to camera apps across devices.

